After graduating from Eastman in 2002, I got involved with music informatics research and spent several years developing music search and discovery technologies. During that time, I was exposed to emerging AI that led me to imagine potential solutions to existing challenges in computational creativity. Today, I’m working to develop methods for human and software collaboration with the goal of generating novel musical expression grounded in familiar perceptual structures.

Unlike other approaches to computational creativity, I’m not interested in directly modeling the creative process in humans. Instead, I’m searching for a musical result that speaks emotionally through a context-aware, emergent grammar — a language that feels natural and engaging, even when its surface is unfamiliar. It’s a tall order, and something that I’ll be working toward for a long time to come.

What is Isomer?

Since early 2012, I’ve been developing my own software (called Isomer) which allows me to move flexibly between interpretations of fixed audio sources, symbolic performances, and detailed orchestrational renderings. (You can read more about how Isomer thinks about music here.)

What makes working with Isomer unique is the advanced role the software plays in musically interpreting the raw input data. Over time, Isomer is learning how human-composed music creates and satisfies expectation (emotional tension) and applying this knowledge to the output it generates.

Making Machines Musical

Applying deep learning technologies to artistic expression is a popular trend. But even in the best cases, this approach generally results in simple, distorted copies of the original input. While it can provide uncanny effects, the results remain tightly bound to limitations within the input models. In my view, creativity requires the ability to forge new connections between existing contexts, and the missing ingredient with current machine learning approaches is the conscious application of context within the creative medium. Or put more accurately: the contextual classification of musical elements critical to defining musical intent and expectation.

These critical elements do not appear to reveal themselves with current deep learning methods, which is why Isomer must become capable of deciding whether or not to apply learned rules to guide musical expression within an ever-changing artistic context. A simple example of this might be the application of a crescendo to a rising melodic line. There isn’t a single, ideal crescendo that will work in every case. Determining whether or not it’s an appropriate addition, and working out exactly how it should be executed, depends heavily on the melodic, harmonic, and timbral environment. Even if Isomer can learn how to apply an appropriate solution, the contextually dependent decision whether or not to do so still remains.

More complex examples include: how to finalize a musical gesture (e.g. registral return, agogic accent, dynamic contrast, none/all of the above?), how to effect a harmonic transition (i.e. adjust harmonic tension within a specific context), and how to orchestrate evolving variations of a specific melodic idea. These examples show how important the interpretation of musical events within their near-term context can be, and it’s for this reason that context awareness is the primary focus of ongoing development. And so with that in mind, let’s take a look at how I collaborate with Isomer throughout the composition process.

Creative Collaboration: Isomer’s Role

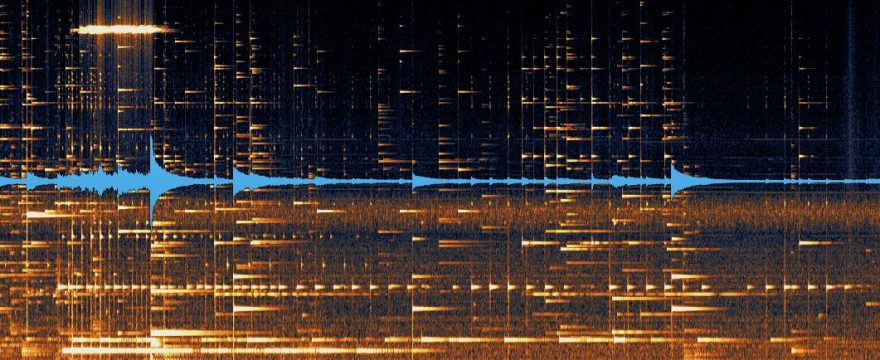

Isomer’s first job in the process is to reverse engineer audio input to determine how its spectral components change over time. Additionally, Isomer searches for perceptually important transient attack points or “event onsets”. Each event onset defines an adaptive analysis window that provides rhythmic context and helps Isomer develop large-scale formal pacing.
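To make the idea of onset-defined windows a little more concrete, here is a minimal sketch in Python. It is not Isomer's actual code: the per-frame energy values, the simple "energy rise above a threshold" test, and the function names `detect_onsets` and `adaptive_windows` are all assumptions made for illustration. Real onset detection is considerably more sophisticated, but the shape of the result is the same: each onset opens a window that lasts until the next onset.

```python
from typing import List, Tuple


def detect_onsets(energy: List[float], threshold: float = 0.5) -> List[int]:
    """Return frame indices where energy jumps by more than `threshold`.

    A crude, hypothetical stand-in for perceptual onset detection.
    """
    onsets = []
    for i in range(1, len(energy)):
        rise = energy[i] - energy[i - 1]
        if rise > threshold:
            onsets.append(i)
    return onsets


def adaptive_windows(onsets: List[int], total_frames: int) -> List[Tuple[int, int]]:
    """Each onset opens a window that runs until the next onset (or the end)."""
    bounds = onsets + [total_frames]
    return [(bounds[i], bounds[i + 1]) for i in range(len(onsets))]


# Made-up per-frame energy values with two clear attacks.
energy = [0.1, 0.1, 0.9, 0.7, 0.4, 0.2, 1.0, 0.8, 0.3, 0.1]
onsets = detect_onsets(energy)
windows = adaptive_windows(onsets, len(energy))
print(onsets)   # [2, 6]
print(windows)  # [(2, 6), (6, 10)]
```

Because the window lengths follow the spacing of the attacks rather than a fixed frame size, the list of windows already carries a rough picture of the rhythm of the source.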

Within each window, partial data is extracted, normalized, and stored. A picture of the composite harmonic layers is assembled (from bottom to top), allowing Isomer to define a series of layered monophonic lines. These lines are then shaped into useful musical material using learned principles of musical expectation and universal construction.
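One way to picture the bottom-to-top layering is sketched below in Python. This is only an illustration under my own assumptions, not a description of Isomer's internals: partials are represented as hypothetical `(frequency, amplitude)` pairs, normalization is a simple division by the loudest partial in the window, and layer k is taken to be the k-th lowest partial in each window. The learned shaping of those lines into musical material is not modeled here.

```python
from typing import List, Optional, Tuple

Partial = Tuple[float, float]  # (frequency in Hz, amplitude); assumed format


def normalize(partials: List[Partial]) -> List[Partial]:
    """Scale amplitudes so the loudest partial in the window is 1.0."""
    peak = max((amp for _, amp in partials), default=0.0)
    if peak == 0.0:
        return list(partials)
    return [(freq, amp / peak) for freq, amp in partials]


def build_layers(windows: List[List[Partial]]) -> List[List[Optional[Partial]]]:
    """Stack partials from lowest to highest; layer k follows the k-th
    lowest partial of every window, with None where a window has fewer."""
    depth = max((len(w) for w in windows), default=0)
    layers: List[List[Optional[Partial]]] = [[] for _ in range(depth)]
    for window in windows:
        ordered = sorted(normalize(window))  # tuples sort by frequency first
        for k in range(depth):
            layers[k].append(ordered[k] if k < len(ordered) else None)
    return layers


# Two made-up analysis windows with two and three partials.
windows = [
    [(440.0, 0.5), (220.0, 1.0)],
    [(330.0, 0.2), (165.0, 0.4), (660.0, 0.1)],
]
layers = build_layers(windows)
bass_line = [p[0] if p else None for p in layers[0]]
print(bass_line)  # [220.0, 165.0]
```

Each entry in `layers` is a monophonic line: at most one partial per window, read from the lowest layer upward.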

Once the model is established (click here for details), Isomer can search for musically relevant patterns and modify the output based on composer input. For some applications, the composer may wish to keep the original analysis generally intact, while in other cases, it may be desirable to ask Isomer to add, remove, or modify events to create a result that is more predictable and universally appealing in its construction.

Creative Collaboration: The Composer’s Role

What’s left for the human composer to contribute, you ask? First, the composer must curate the input source(s). Whether it’s a series of short “found” sounds or a meticulously crafted concrète work, the input source has a significant impact on the harmonic content and dramatic pacing of the resulting work.

Speaking of output, Isomer’s analysis often results in hundreds of tracks, but only a few (maybe 24 or so?) are likely to be used in the completed work. The composer must evaluate the potential for each of these output tracks and determine which will make it into the piece.

In early versions of the software, the composer completely determined the orchestration. Today, Isomer sets out a basic orchestrational plan which must be modified by the composer. In future versions, Isomer will evaluate the timbral signatures of orchestral options to determine the most musically useful combinations of instruments to employ within the varying emotional states of the work.

And finally, the composer must digitally realize and produce the resulting piece. Digital production isn’t traditionally considered part of the compositional process, but for me (as with many composers of electronic music) it is. Machine listening is still a long way from dealing with detailed production choices, and these decisions can have an enormous effect on the resulting piece. So for now, production must be up to the composer.

A Final Thought

Keep in mind that while exploring creative collaboration with computers is an interesting topic, these process details really don’t define anything too important. What’s truly important is the experience gained by listening. And that will always remain the biggest challenge for anyone involved in creative pursuits — computationally collaborative, or otherwise.
