It’s widely accepted that music elicits similar emotional responses from culturally connected groups of human listeners. Less clear is how various aspects of musical language contribute to these effects.

Funded by a Mellon Foundation Research Grant through Dickinson College Digital Humanities, our research into this question uses cognition-based machine listening algorithms and network analysis of musical descriptors to identify the connections between musical affect and language.

We drew our experimental corpus from music libraries used in advertising because this music has been shown to elicit targeted emotions effectively across diverse audiences. Experts from the music industry provided detailed emotion-based metatags for each track in our corpus.

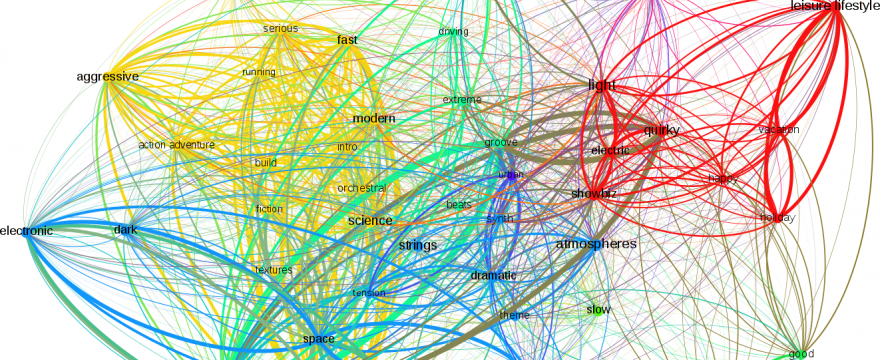

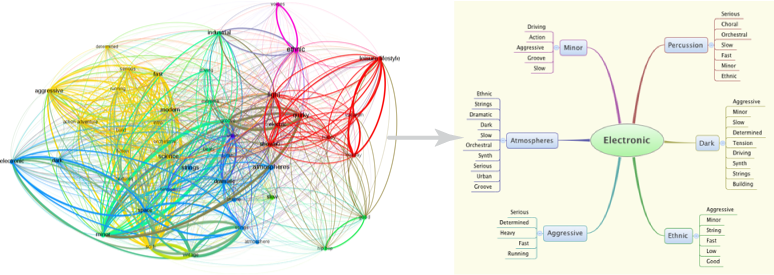

Using network analysis techniques, Isomer-Keyword built models to identify meaningful tag correlations.
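As a rough illustration of what a tag network can look like, the sketch below counts how often tags co-occur across tracks and keeps the pairs whose Jaccard similarity clears a threshold. It is not Isomer-Keyword's actual method: the function names, the toy tags, and the choice of Jaccard similarity as the edge weight are all assumptions made for this example.

```python
from collections import Counter
from itertools import combinations


def tag_cooccurrence(tracks):
    """Count single tags and tag pairs over a mapping of track id -> set of tags."""
    tag_counts = Counter()
    pair_counts = Counter()
    for tags in tracks.values():
        unique = sorted(set(tags))  # sorted so each pair has one canonical order
        tag_counts.update(unique)
        pair_counts.update(combinations(unique, 2))
    return tag_counts, pair_counts


def jaccard_edges(tag_counts, pair_counts, threshold=0.5):
    """Keep tag pairs whose Jaccard similarity is at least `threshold`."""
    edges = {}
    for (a, b), both in pair_counts.items():
        union = tag_counts[a] + tag_counts[b] - both
        score = both / union
        if score >= threshold:
            edges[(a, b)] = score
    return edges


# Hypothetical toy corpus; the real corpus and tags are not reproduced here.
tracks = {
    "t1": {"electronic", "dark", "sci-fi"},
    "t2": {"electronic", "dark", "sci-fi"},
    "t3": {"electronic", "upbeat"},
    "t4": {"acoustic", "warm"},
}

tag_counts, pair_counts = tag_cooccurrence(tracks)
edges = jaccard_edges(tag_counts, pair_counts, threshold=0.5)
for pair, score in sorted(edges.items()):
    print(pair, round(score, 3))
```

Each surviving pair can be read as a weighted edge in a tag graph. A threshold is only one way to prune weak links, and a real analysis would likely tune it to the corpus.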

High-level feature extraction from the tagged audio files allowed Isomer-Model to isolate characteristic musical patterns and trends in each track.

Finally, we correlated these keyword combinations with our audio feature models to determine which musical aspects were most responsible for communicating mood.
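One simple way to picture this correlation step is to treat "track carries this keyword combination" as a 0/1 indicator and compute its Pearson correlation with a single audio feature. The sketch below does that. It is an assumption-laden illustration, not the project's pipeline: the feature name `tempo_bpm`, the toy values, and the helper names are all hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def combo_feature_correlation(tracks, features, combo, feature_name):
    """Correlate membership in a tag combination with one audio feature."""
    combo = set(combo)
    ids = sorted(tracks)
    indicator = [1.0 if combo <= set(tracks[t]) else 0.0 for t in ids]
    values = [features[t][feature_name] for t in ids]
    return pearson(indicator, values)


# Hypothetical tags and feature values, for illustration only.
tracks = {
    "t1": {"electronic", "dark", "sci-fi"},
    "t2": {"electronic", "dark", "sci-fi"},
    "t3": {"electronic", "upbeat"},
    "t4": {"acoustic", "warm"},
}
features = {
    "t1": {"tempo_bpm": 90.0},
    "t2": {"tempo_bpm": 92.0},
    "t3": {"tempo_bpm": 128.0},
    "t4": {"tempo_bpm": 110.0},
}

r = combo_feature_correlation(tracks, features, {"dark", "sci-fi"}, "tempo_bpm")
print(f"correlation with tempo_bpm: {r:.3f}")
```

In this toy data the combination is attached to the slower tracks, so the correlation comes out negative. Repeating the calculation over many features would indicate which ones move most strongly with a given combination.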

Results

We successfully trained feature models for 250,000+ metatag combinations over a corpus of 10,000 audio tracks. Additionally, we defined a scalable process to train feature models and autonomously assign emotion descriptions.

Our research revealed that keyword combinations were required to successfully differentiate emotional content within individual tracks. For example, “electronic” must be combined with “dark” and “sci-fi” to create an effective identifier. For keyword combinations in which correlations were identified, Isomer was able to leverage feature training to create smaller, more closely related subsets of tagged classifications.
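The narrowing effect of combining keywords can be shown with a small sketch: a single tag such as “electronic” matches a broad set of tracks, while each added tag shrinks the set. The track ids, tags, and function name below are hypothetical and stand in for the real corpus.

```python
def tracks_with_all(tracks, required):
    """Return sorted ids of tracks whose tags include every tag in `required`."""
    required = set(required)
    return sorted(tid for tid, tags in tracks.items() if required <= set(tags))


# Hypothetical toy corpus, for illustration only.
tracks = {
    "t1": {"electronic", "dark", "sci-fi"},
    "t2": {"electronic", "upbeat", "pop"},
    "t3": {"electronic", "dark", "ambient"},
    "t4": {"acoustic", "warm"},
}

broad = tracks_with_all(tracks, ["electronic"])
narrower = tracks_with_all(tracks, ["electronic", "dark"])
narrowest = tracks_with_all(tracks, ["electronic", "dark", "sci-fi"])

print(len(broad), len(narrower), len(narrowest))
```

Here the single tag spans tracks with very different moods, and only the full three-tag combination isolates one consistent group.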