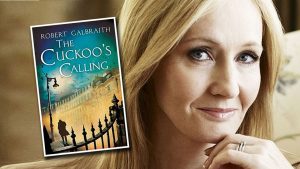

In July of 2013, Patrick Juola, a computer science/mathematics researcher at Duquesne University, uncovered the fact that J.K. Rowling was writing detective novels under an assumed name. Juola used simple statistical methods (primarily distribution-based) to analyze the length, frequency, and relative placement of words and n-grams. The results were compared against numerous writing samples, and presto, Robert Galbraith was found to be J.K. Rowling.

Incredibly, this fairly simple approach produced useful results regarding authorship (and, in a very limited sense, style), and the algorithms never parsed the meaning of a single word! It bears repeating: meaning was never addressed.
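To make the idea concrete, here is a minimal Python sketch of meaning-free authorship comparison, loosely in the spirit of the distribution-based approach described above. It is not Juola's actual implementation. The feature set (a word-length distribution plus the relative frequencies of a handful of function words), the cosine distance, and the names `tokenize`, `profile`, and `attribute` are hypothetical choices for illustration. Notice that nothing in it ever looks at what a word means.

```python
import math
import re
from collections import Counter

# Hypothetical, deliberately tiny function-word list; real studies use far more.
FUNCTION_WORDS = ("the", "and", "of", "to", "a", "on", "in", "that", "it", "was")


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; punctuation and digits are dropped."""
    return re.findall(r"[a-z']+", text.lower())


def word_length_distribution(tokens: list[str], max_len: int = 15) -> list[float]:
    """Relative frequency of word lengths 1..max_len (longer words share the last bin)."""
    if not tokens:
        return [0.0] * max_len
    counts = Counter(min(len(t), max_len) for t in tokens)
    return [counts[n] / len(tokens) for n in range(1, max_len + 1)]


def function_word_frequencies(tokens: list[str], words: tuple[str, ...]) -> list[float]:
    """Relative frequency of each listed function word in the token stream."""
    if not tokens:
        return [0.0] * len(words)
    counts = Counter(tokens)
    return [counts[w] / len(tokens) for w in words]


def profile(text: str, words: tuple[str, ...] = FUNCTION_WORDS) -> list[float]:
    """Concatenate both distributions into one stylistic feature vector."""
    tokens = tokenize(text)
    return word_length_distribution(tokens) + function_word_frequencies(tokens, words)


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity; returns 1.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


def attribute(disputed: str, candidates: dict[str, str]) -> str:
    """Return the candidate whose writing sample has the closest stylistic profile."""
    target = profile(disputed)
    return min(candidates, key=lambda name: cosine_distance(target, profile(candidates[name])))


if __name__ == "__main__":
    samples = {
        "A": "the cat sat on the mat and the dog sat on the log",
        "B": "extraordinarily complicated bureaucratic procedures necessitate comprehensive documentation",
    }
    print(attribute("the dog sat on the mat and the cat sat on the log", samples))
```

With real data one would use book-length samples and a much larger list of common words (and likely character n-grams as well); the two one-line samples here only demonstrate the mechanics.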

This is encouraging news for the MIR (music information retrieval) community, where many researchers seek similar results. However, as I see it, a critical difference between most MIR projects and the work of ‘forensic linguists’ like Juola lies in the understanding and availability of grammatical morphemes.

The Challenges of Music as Language

Much of current MIR research focuses on uncovering trends and correlations in data provided by raw features. This is especially true when dealing with audio, and it has, in some cases, produced useful results. But features derived from digital signal processing (DSP) do not constitute aspects of musical grammar, and the limited success of so many feature-based projects suggests that we may be overlooking an important piece of the puzzle.

Musical language is abstract in the sense that it doesn’t contain lexical morphemes: units of grammar that carry specific meaning(s). This freedom from restrictive definitions is arguably one of music’s most compelling features, but it also makes parsing music’s largely self-referential and often emergent grammar extremely challenging.

Within musicological, theoretical, and cognitive research circles, it has been shown that fundamental principles of musical grammar, free of cultural associations, do in fact exist, especially when events are considered in context. What’s more, this built-in syntax may be at least partly responsible for our ability to differentiate between noise and musical sounds. In my view, it is here that we must direct our energies.

Bridging the gap between raw audio features and musically meaningful morphemes is no simple task, but I believe it is essential to opening the path to many desirable MIR applications. As such, extracting musically relevant morphemes is a primary force behind the design and architecture of the Isomer code base.

The Power of Multiple Representations

In the case of audio input, Isomer separates feature data into four musical components: melody, harmony, rhythm and timbre. Crucially, it also interprets the data to begin building musical context around individual observations. But most notable is Isomer’s ability to create multiple abstracted representations from a single audio source.

The power of implementing a series of interpreted abstractions is that audio feature data can be represented multiple times at varying depths from the original observations, providing a variety of ways to view and examine the model. For example, the locations of perceptually important event onsets, ‘beats’, or perceptual pulse points may be calculated more quickly and accurately from Isomer’s normalized representation than from the raw feature data. In other cases, the detection of more expansive musical elements like harmony and harmonic rhythm may benefit significantly from a more general reduction of the raw DSP data.
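The idea of several representations at different depths can be illustrated with a toy Python sketch. This is not Isomer’s code, and the post does not show Isomer’s API; the functions `normalize`, `pick_peaks`, `reduce_chroma`, and `build_representations`, the 0.5 peak threshold, and the four-frame block size are all hypothetical. It assumes an upstream DSP stage has already produced a frame-level onset-strength envelope and 12-bin chroma vectors, then derives a normalized envelope, a list of onset candidates read off that normalized layer, and a coarse harmonic reduction, all from the same source.

```python
from dataclasses import dataclass

PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def normalize(values: list[float]) -> list[float]:
    """Min-max scale a feature curve to the range 0..1."""
    if not values:
        return []
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def pick_peaks(curve: list[float], threshold: float = 0.5) -> list[int]:
    """Indices of local maxima at or above threshold, treated here as onset candidates."""
    return [
        i
        for i in range(1, len(curve) - 1)
        if curve[i] >= threshold and curve[i] > curve[i - 1] and curve[i] >= curve[i + 1]
    ]


def reduce_chroma(frames: list[list[float]], block: int = 4) -> list[str]:
    """Average 12-bin chroma over blocks of frames and keep the strongest pitch class."""
    reduced = []
    for start in range(0, len(frames), block):
        chunk = frames[start:start + block]
        mean = [sum(f[k] for f in chunk) / len(chunk) for k in range(12)]
        reduced.append(PITCH_CLASSES[max(range(12), key=lambda k: mean[k])])
    return reduced


@dataclass
class Representations:
    raw_envelope: list[float]         # depth 0: the DSP feature as observed
    normalized_envelope: list[float]  # depth 1: the same curve scaled to 0..1
    onset_frames: list[int]           # depth 2: discrete events read off the normalized layer
    harmonic_reduction: list[str]     # coarse harmonic view from block-averaged chroma


def build_representations(
    envelope: list[float],
    chroma: list[list[float]],
    threshold: float = 0.5,
    block: int = 4,
) -> Representations:
    """Derive every layer from one source, keeping each alongside the raw data."""
    normalized = normalize(envelope)
    return Representations(
        raw_envelope=envelope,
        normalized_envelope=normalized,
        onset_frames=pick_peaks(normalized, threshold),
        harmonic_reduction=reduce_chroma(chroma, block),
    )
```

The design point is that each layer is derived from, but stored alongside, the one beneath it, so an onset or beat tracker can work from the normalized layer while a harmony model works from the reduction, without either having to re-derive its view from the raw features.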

From stylistic identification to model-based music composition, many challenges facing the MIR community remain out of reach using current approaches. In addition to offering greater flexibility as a research tool, I believe these features of Isomer’s architecture will do much to bring us closer to a more meaningful set of data on which to work.
